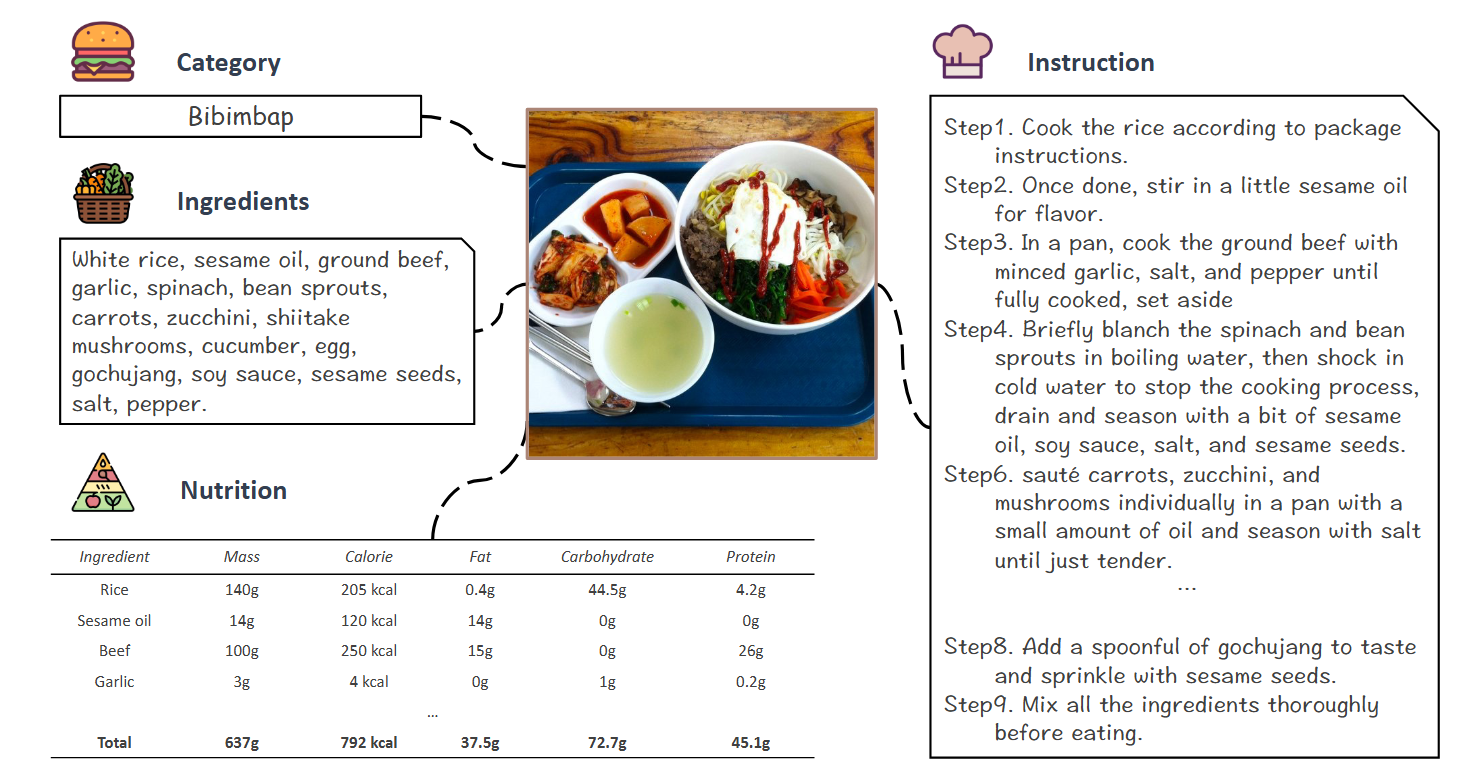

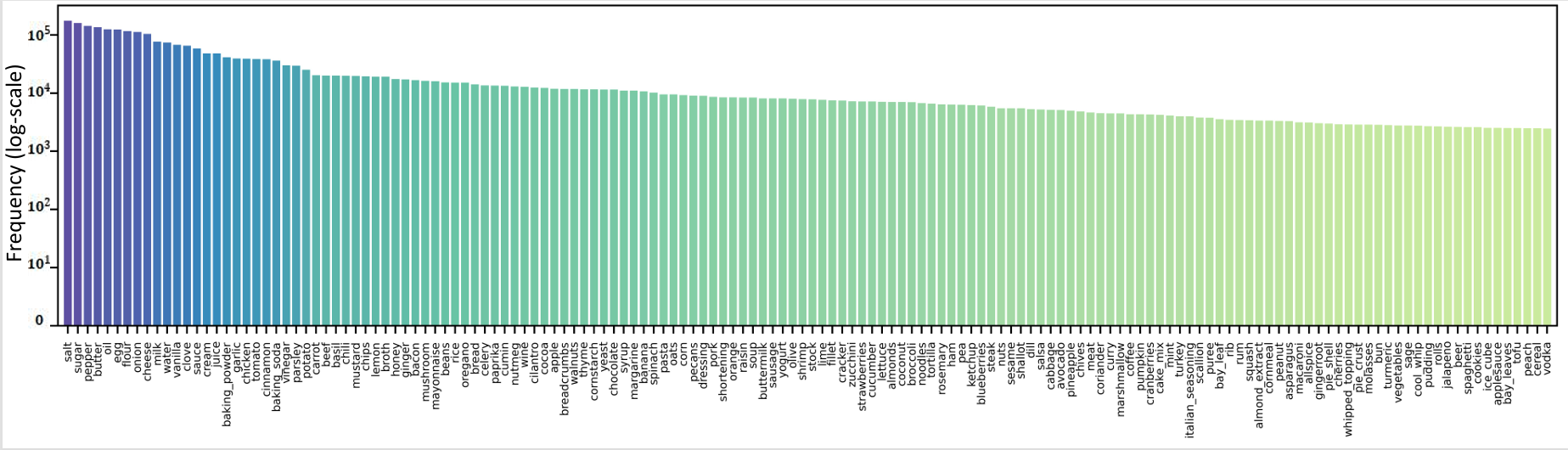

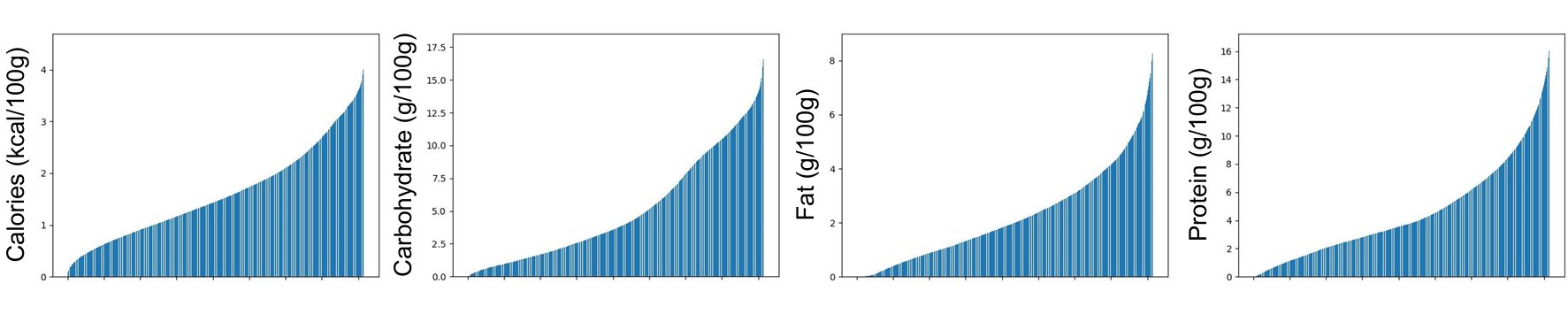

Food is a fundamental part of the human experience and strongly influences health and well-being. In recent years, considerable progress has been made in inferring food composition and nutritional content from images. However, existing food datasets are generally designed for specific tasks, such as food classification or recipe generation, and lack the comprehensive annotations needed for Food and Nutrition Analysis, which limits the performance of models on this task. To bridge this gap, we introduce UniFood, the first unified, large-scale food dataset for comprehensive Food and Nutrition Analysis. UniFood contains 501,533 food images, each annotated with food categories, ingredients, cooking instructions, and nutrition information at both the ingredient level and the image level. The dataset is constructed by integrating and expanding existing food datasets through a robust sampling strategy, with annotations enhanced using GPT-4V. Our experiments provide a detailed comparative analysis across various food-related tasks and show that UniFood sets a new benchmark for the field, highlighting the transformative potential of AI in advancing Food and Nutrition Analysis.
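As a rough illustration of the per-image annotations listed above, the sketch below models one record as Python dataclasses. The field names, the nutrient keys in `NUTRIENTS`, and the rule that image-level nutrition equals the sum of ingredient-level values are all hypothetical assumptions chosen for illustration; they are not UniFood's published schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical nutrient keys; UniFood's actual nutrition fields may differ.
NUTRIENTS = ("calories_kcal", "protein_g", "fat_g", "carbs_g")


@dataclass
class Ingredient:
    name: str
    # Ingredient-level nutrition, keyed by the hypothetical NUTRIENTS names.
    nutrition: Dict[str, float]


@dataclass
class FoodRecord:
    image_path: str
    category: str
    ingredients: List[Ingredient]
    instructions: List[str]
    # Image-level nutrition; empty by default so it can be derived below.
    nutrition: Dict[str, float] = field(default_factory=dict)

    def aggregate_nutrition(self) -> Dict[str, float]:
        """Assumption: image-level values are the sum over all ingredients."""
        totals = {key: 0.0 for key in NUTRIENTS}
        for ing in self.ingredients:
            for key in NUTRIENTS:
                # Missing nutrient entries are treated as zero.
                totals[key] += ing.nutrition.get(key, 0.0)
        return totals


# Usage with made-up example values.
record = FoodRecord(
    image_path="images/000001.jpg",
    category="fried rice",
    ingredients=[
        Ingredient("rice", {"calories_kcal": 200.0, "protein_g": 4.0, "fat_g": 0.5, "carbs_g": 44.0}),
        Ingredient("egg", {"calories_kcal": 70.0, "protein_g": 6.0, "fat_g": 5.0, "carbs_g": 0.5}),
    ],
    instructions=["Scramble the egg.", "Stir-fry it with the rice."],
)
record.nutrition = record.aggregate_nutrition()
print(record.nutrition)
# {'calories_kcal': 270.0, 'protein_g': 10.0, 'fat_g': 5.5, 'carbs_g': 44.5}
```

Storing ingredient-level values separately keeps the image-level totals derivable and checkable; if the real dataset annotates image-level nutrition independently (for example, from portion estimates), the aggregation method would serve only as a consistency check.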